Condensed notes from “Ethics of AI” at NYU, October 14-15th, 2016

Last October I attended the Ethics of AI conference at NYU (link to program notes and videos). I’ve decided to publish some of my tweets and notes from the conference here.

The philosopher David Chalmers did an excellent job assembling a lineup of the leading thinkers who work on the AI control problem. Additionally, Daniel Kahneman (the Nobel Prize-winning psychologist) and Jürgen Schmidhuber (a famous AI expert and co-inventor of LSTM) happened to be in the area; they dropped in on the conference and were invited to participate in the panel on the 2nd day. The famous philosopher of mind Thomas Nagel was also present in the audience, as was Ned Block. I enjoyed meeting and talking briefly with Eliezer Yudkowsky, Stephen Wolfram, Yann LeCun, Kate Devlin, and Jaan Tallinn.

Some takeaways I tweeted:

- The AI control problem (i.e. “making AI ethical”) is an exciting field of research at the intersection of ethics, philosophy of mind, and computer science.

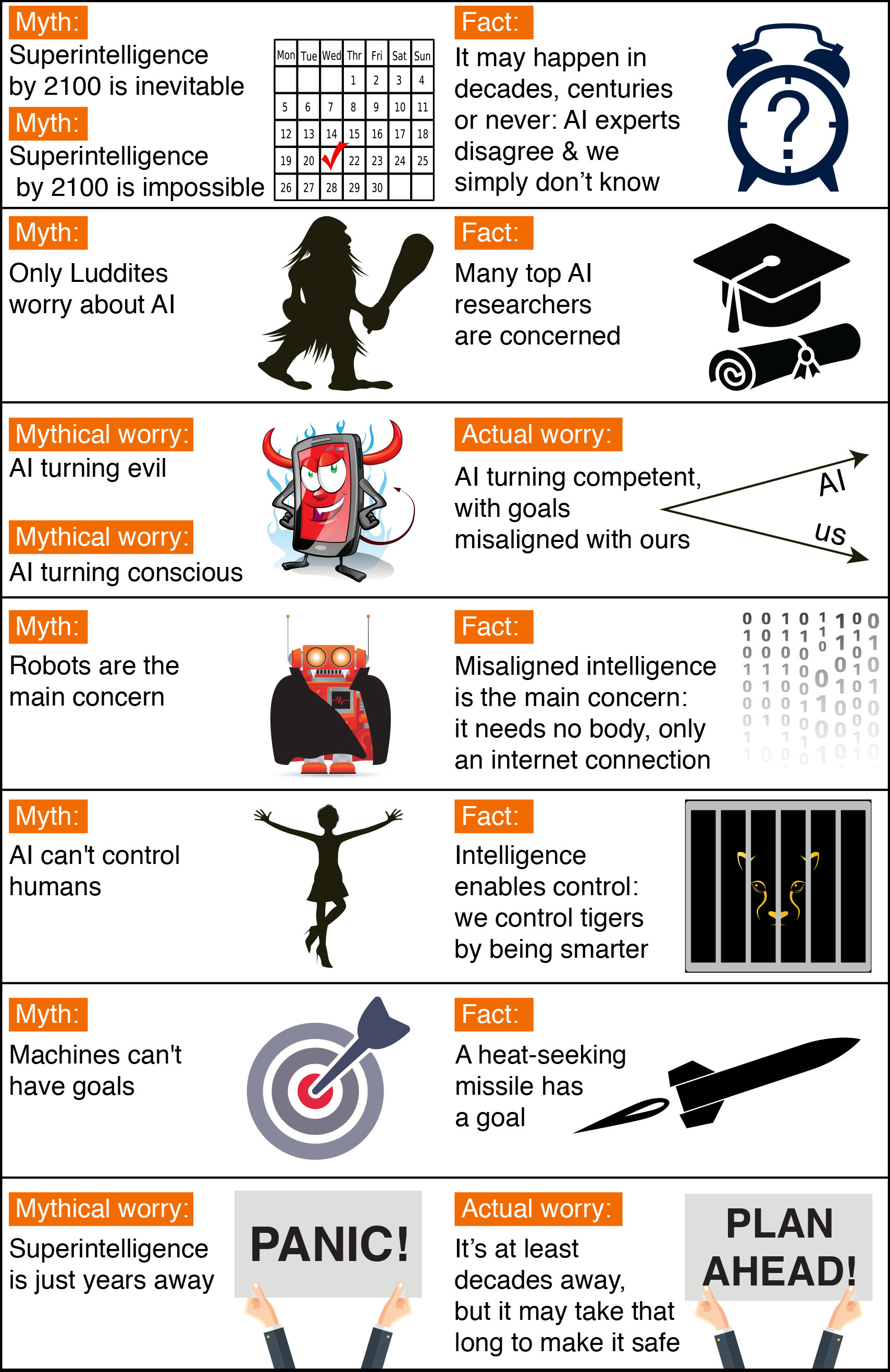

- Bostrom, day 1: The technical side of the AI control problem is likely easier to make progress on than the governance problem. So, if you have the choice, it is better to work on the control problem, where marginal effort has more influence. That said, Bostrom seemed to say that he is thinking more about how to attack the governance problem. Another point that came up on day 2 is that we are blind to the full social context of AI research: we have very little idea how much AI research is currently hidden inside military-industrial complexes.

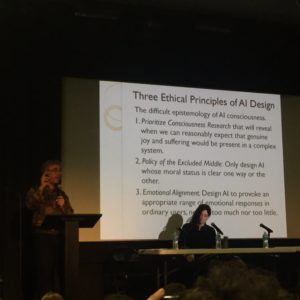

- Bostrom, day 1: Humanity is likely to commit mind crime in the future. Given how much trouble we have granting moral status to animals, the mass murder of sentient AIs is likely.

- Virginia Dignum, day 1: we need more ethicists involved in technology. Currently technology is causing ‘moral overload’ – how do we balance “Prosperity vs Sustainability”, “Security vs Privacy”, “Efficiency vs Safety”, “Accountability vs Confidentiality”?

- Yann LeCun, day 1: “Something is ‘AI’ until it is implemented, then it is not.”

- Stuart Russell – one of the goals of OpenAI is to move the ‘center of gravity’ of AI research outside of military research labs, so that we can see where along the development curve we are.

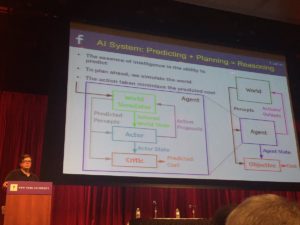

- Stuart Russell: value alignment will likely have to be achieved through some form of “inverse reinforcement learning” – learning the value function of a human being by observing their behaviour. Russell says the algorithms for this are already in place. Think of values as latent variables. The Probably Approximately Correct (PAC) learning framework can be applied to get PAC alignment.

- Stuart Russell: A problem with value alignment is that humans are “nasty, irrational, inconsistent, weak-willed, computationally limited and heterogeneous”. Can we use self-consistency to filter out unpleasant value systems? We will likely have to layer some metaethical constraints on top of inverse RL – self-consistency and something like the categorical imperative.

- E.S. Yudkowsky – (my most retweeted tweet of the conference) “if the power differential is large, some agents in game theory have little incentive to cooperate”.

- E.S. Yudkowsky – popular conceptions of the AI safety problem in movies are highly misleading – a better example is the overflowing bucket scene from The Sorcerer’s Apprentice. [full slides here]

- E.S. Yudkowsky: “AI alignment is difficult like space probes are difficult: once you have launched it it’s out there” (h/t Kate Devlin’s tweet)

- Max Tegmark – according to Seth Lloyd, we are 33 orders of magnitude from the physical limits of computation.

- Wendell Wallach – coordination failures lead to disasters. If we don’t ban lethal autonomous weapons systems now, all bets are off.

- Wendell Wallach – The “bottom up” approach to value alignment looks at how moral decision making develops in children.

- Wendell Wallach – Engineers are hesitant to discuss ethics beyond “maximizing a utility function” because they view it as “politics by other means”. Yet pure utilitarianism can run roughshod over human rights. We need to be aware of the cognitive biases of engineers as well as their ethical biases. Spirituality is rarely considered. Do WEIRD (Western, educated, industrialized, rich, democratic) people have overly biased ethics? Is this a problem for value alignment?

- Steve Petersen: there is a slight flaw in the orthogonality thesis, at least in the low-intelligence regime: sometimes the goals of low-intelligence agents are incoherent or inconsistent. Less intelligent agents often have flawed ethical systems.

- Steve Petersen: the cool thing about considering superintelligent AI is that it makes abstract ethical problems concrete, fast.

- S. Matthew Liao: “the right to bodily integrity” is a necessary precursor for developing ethical theories.

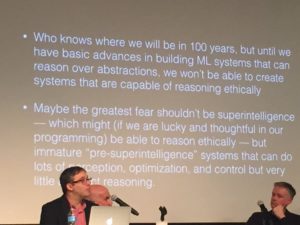

- Gary Marcus: Thinking ethically requires high levels of abstraction – theory of mind, envisioning the future, using abstract concepts like rights, duties, and obligation. Maybe we should be more worried about “teenage” AI that is powerful, but not yet capable of ethical reasoning.

- Thinking about ethical responsibility with AI is often reversed. We think AGIs should be in debt to us because we created them. However, creating an AGI creates a responsibility, just as a parent is responsible for looking after a child.

- Kahneman: Our moral intuitions developed in a very limited environment. They are breaking down and will continue to break down as our environment changes.

- Ronald Sandler – there may be some experiments on AI that we shouldn’t do until we have proper protections in place. How would informed consent for AIs work? Just by bringing it into existence, isn’t it being researched? How do we release an AI?

- Jaan Tallinn – “When we spend $10 mil on a boxing match, we are not close to the Pareto frontier (as a society)”
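
To make Stuart Russell's point about inverse reinforcement learning a bit more concrete, here is a toy sketch of "values as latent variables". It is my own illustration, not an algorithm presented at the conference. It assumes a small, made-up set of candidate value systems (`CANDIDATE_VALUES`), a human who chooses noisily in proportion to reward (a softmax with a hypothetical rationality parameter `beta`), and a uniform prior. Real inverse RL works over sequential decision problems and is far more involved.

```python
import math

# Hypothetical candidate value systems (assumed for illustration only):
# each one assigns a reward to every option the human can pick.
CANDIDATE_VALUES = {
    "prefers_safety": {"safe": 1.0, "fast": 0.0},
    "prefers_speed": {"safe": 0.0, "fast": 1.0},
    "indifferent": {"safe": 0.5, "fast": 0.5},
}


def choice_likelihood(rewards, chosen, options, beta=3.0):
    """Probability that a noisily rational agent picks `chosen`.

    Softmax over rewards: a higher `beta` means a more consistent chooser.
    """
    weights = {o: math.exp(beta * rewards[o]) for o in options}
    return weights[chosen] / sum(weights.values())


def infer_values(observed_choices, options=("safe", "fast"), beta=3.0):
    """Posterior over the candidate value systems, given observed choices."""
    # Start from a uniform prior over the candidates.
    posterior = {name: 1.0 / len(CANDIDATE_VALUES) for name in CANDIDATE_VALUES}
    for chosen in observed_choices:
        # Bayes update: weight each candidate by how well it explains the choice.
        for name, rewards in CANDIDATE_VALUES.items():
            posterior[name] *= choice_likelihood(rewards, chosen, options, beta)
        total = sum(posterior.values())
        posterior = {name: p / total for name, p in posterior.items()}
    return posterior


if __name__ == "__main__":
    # A human who mostly, but not always, picks the safe option.
    observed = ["safe", "safe", "fast", "safe", "safe"]
    ranked = sorted(infer_values(observed).items(), key=lambda kv: -kv[1])
    for name, p in ranked:
        print(f"{name}: {p:.3f}")
```

Note that the one inconsistent choice ("fast") does not break the inference; it just makes the estimate less certain. That is the same inconsistency Russell flags as a problem for value alignment.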

Some highlights from the conference

Fun moments